David Lightman is a computer whiz kid who’s dying to play a soon-to-be-released video game. He hacks into the computer company’s system and starts playing the game called “Global Thermonuclear War.” What the American teenager doesn’t realize is that he’s just inadvertently entered the mainframe computer at the U.S. Department of Defense — and it thinks David is the Russians.

This government computer, known as “the WOPR” (War Operation Plan Response), is not merely playing a game. The computer’s CPU — basically its brain — believes that the enemy is preparing to attack. As a result, the WOPR’s artificial intelligence (AI) starts preparing to launch American nukes in retaliation. It is designed to communicate directly with the missiles sitting in our silos, and since the DOD decided to take its human operatives “out of the loop,” the WOPR is now the sole entity that has the ability to “turn the launch key.”

This plot unfolded 40 years ago in a movie called War Games. What ultimately happened? We’ll get back to David vs. the WOPR in a minute. Keep it fresh in your CPU…

In recent weeks you may have heard that leaders in the artificial intelligence industry are getting very worried about the technology that they’ve been building for the past four decades. So much so that on May 29, more than 300 of them signed onto a one-sentence public statement:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Aside from being astonished by the casual nature, obviousness, and seeming tardiness of this news, I was also puzzled that the “risk of extinction from AI” and “nuclear war” were expressed as separate prospective events.

But I was even more surprised listening to an interview Fareed Zakaria did over the weekend with Geoffrey Hinton, the man known as the “Godfather of AI.” Hinton first talked about creating the algorithm that was the original source of AI back in 1986. Yet in the next sentence, Hinton said that it was “only a few months ago” when he started becoming concerned about the future:

“It is worrying, because we don’t know any examples of more intelligent things being controlled by less intelligent things…the worry is, can we keep them working for us when they’re much more intelligent than us.”

Human beings are morons. I know this because I am one. It’s hard to believe that AI hasn’t passed us by already.

What to do? In testimony before Congress last month, the influential CEO of OpenAI, Sam Altman, suggested that Congress should regulate the industry. This is not a confidence-inspiring solution. Setting aside the fact that Congress’s track record of regulating tech is dismal, even if that could be accomplished, you have 194 other countries that would then need to follow suit. Try putting a clock on that.

When Hinton was asked what his solution would be, the Godfather meekly offered the following:

“There is no simple solution…the best that people can come up with, I think, is that you try and give these things strong ethics.”

Are you kidding me? Am I being pranked? Human beings can’t even agree on globally accepted codes of ethics. Has this AI sage ever heard of autocracies?

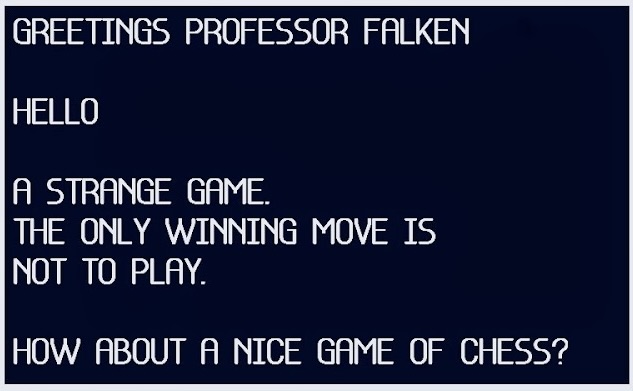

Back to War Games. At the end of the movie, the WOPR discovers the secret nuclear code, and it seems as if it’s going to launch the missiles against a superpower that has committed no aggressive action. The DOD officials look up at the massive screen in the NORAD control center, and it looks like a nuclear war between the US and the Soviet Union is under way.

But it’s not. What the WOPR is doing is playing out on the screen every single type of nuclear scenario possible — and finding the same result: mutually assured destruction. No survivors.

All of a sudden, the WOPR starts playing Tic-Tac-Toe against itself. Grids flash across the screen at an insane pace, representing every possible way to play the pointless childhood game.

In the last moment of the movie, we see the huge NORAD screen go completely blank, except for the final nuclear code at the bottom. The sealed-off bunker is completely quiet. Then we see two sentences print out on the screen, and we hear them spoken aloud by the WOPR’s crackly artificial voice:

“A strange game. The only winning move is not to play.”

The WOPR had taken in data, run some modeling, and made a judgment. It had learned. But learning can lead to all kinds of judgments, and not only good ones. Add to that the fact that “good” and “bad” are often in the eye of the beholder.

Of course, War Games is just a movie. But it was produced in 1983, three years before Hinton and his geniuses created that first AI algorithm. It is very hard to believe that, in the 37 years that followed, that same brain trust lacked the imagination to envision the existential problems that AI could create, and only got there in 2023.

In the last question of the interview, Geoff Hinton is asked whether he’s going to help solve the problem. The guru’s answer:

“I think I’m too old to solve new problems. I’ve done my bit of solving problems, but I will help. But I’m planning to retire.”

Zakaria exclaims back to Hinton that he’s “leaving us all in a lurch!”

Finally, without a hint of irony, Hinton says:

“Yeah. It doesn’t look good, does it.”

Gotta be a prank.